In April 2023 I collaborated with Wojciech Siostrzonek and a local university, Politechnika Śląska (Silesian University of Technology), on a project under the supervision of a PhD holder. The project concluded with a presentation at a conference on 22 September. Here is the link to our project repository, and here is the English version of our conference poster.

We wanted to explore how reinforcement learning algorithms work, so we created a small environment in which an agent moves a broom around a room to drop dirt into mounds (which act like holes).

The DDPG (Deep Deterministic Policy Gradient) algorithm behind our agent is described in the original paper.
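For readers unfamiliar with DDPG, the sketch below shows two of its core ingredients: the critic's bootstrapped training target, computed with slowly updated target networks, and the soft (Polyak) update that moves those target weights toward the online weights. It is a minimal illustration, not our project code: the networks are stood in for by plain callables and flat weight lists, and the function names and default values of `gamma` and `tau` are assumptions chosen for illustration.

```python
from typing import Callable, List, Sequence


def critic_target(
    reward: float,
    done: bool,
    next_state: Sequence[float],
    target_actor: Callable[[Sequence[float]], List[float]],
    target_critic: Callable[[Sequence[float], Sequence[float]], float],
    gamma: float = 0.99,  # illustrative discount factor, not our setting
) -> float:
    """Bellman target for the DDPG critic: y = r + gamma * (1 - done) * Q'(s', mu'(s'))."""
    if done:
        return reward  # no bootstrapping past a terminal state
    next_action = target_actor(next_state)  # deterministic target policy mu'(s')
    return reward + gamma * target_critic(next_state, next_action)


def soft_update(target: List[float], online: Sequence[float], tau: float = 0.005) -> None:
    """In-place Polyak averaging of target weights: theta' <- tau * theta + (1 - tau) * theta'."""
    for i, w in enumerate(online):
        target[i] = tau * w + (1.0 - tau) * target[i]
```

The critic is then regressed toward `critic_target(...)` over a minibatch from the replay buffer, and `soft_update` is applied to both the target actor and the target critic after each training step, so that the targets change slowly and training stays more stable.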

Results

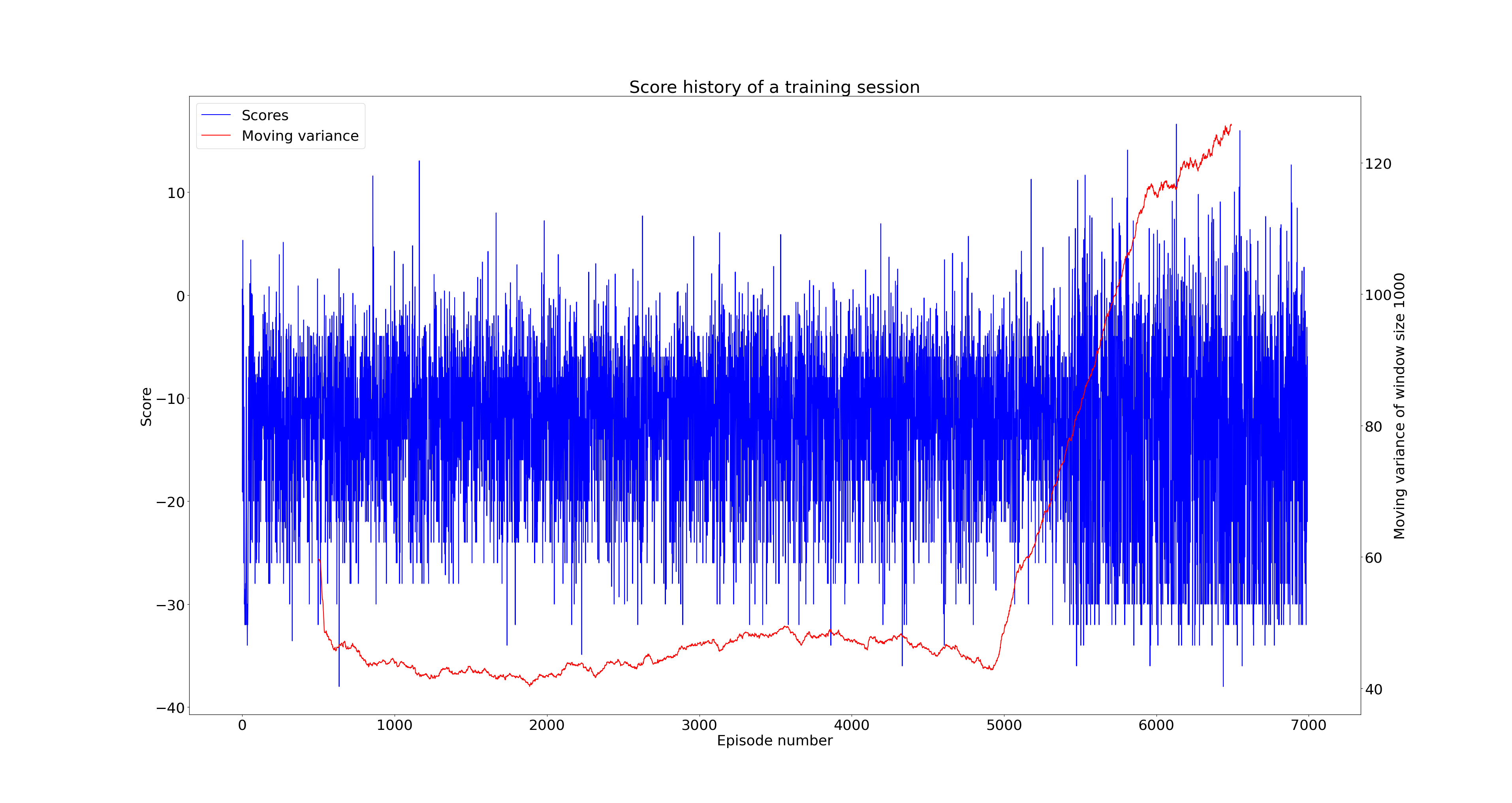

Our agent did not learn to clean. The only oddity was a sudden increase in the variance of the scores, which we could not reproduce in later experiments.
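One simple way to check a score history for this kind of jump is to compute the variance over a sliding window of episodes and flag windows far above the typical level. This is a hypothetical sketch, not how we analyzed our runs: the function names, the window size of 50 and the threshold factor of 5 are arbitrary assumptions.

```python
from statistics import median, pvariance
from typing import List, Sequence


def rolling_variance(scores: Sequence[float], window: int = 50) -> List[float]:
    """Population variance of every run of `window` consecutive episode scores.

    Element i covers episodes i .. i + window - 1.
    """
    if window < 2:
        raise ValueError("window must be at least 2")
    return [pvariance(scores[i:i + window]) for i in range(len(scores) - window + 1)]


def variance_jumps(scores: Sequence[float], window: int = 50, factor: float = 5.0) -> List[int]:
    """Episodes (last index of each window) whose windowed variance exceeds
    `factor` times the median windowed variance of the whole history."""
    variances = rolling_variance(scores, window)
    if not variances:
        return []  # history shorter than one window
    baseline = median(variances)
    return [i + window - 1 for i, v in enumerate(variances) if v > factor * baseline]
```

Using the median as the baseline keeps a short burst of high variance from inflating the threshold it is compared against.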

Discussion

I suspected that the anomaly might have come from the cleaning agent appending its scores to a previous score history, but after analyzing the experiments and the commit history, I consider that very unlikely.
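A way to rule out this failure mode by construction in future runs is to write each run's score history to its own file and refuse to open one that already exists. The sketch below is hypothetical: the function name and the `scores_<run_id>.json` layout are assumptions, not how our project stored scores. It relies on Python's exclusive-creation file mode `"x"`, which raises `FileExistsError` instead of appending to or overwriting an existing file.

```python
import json
from pathlib import Path
from typing import Sequence


def save_scores(run_dir: Path, run_id: str, scores: Sequence[float]) -> Path:
    """Write one run's score history to a fresh file; never touch an existing one."""
    path = Path(run_dir) / f"scores_{run_id}.json"
    # Mode "x" creates the file and fails with FileExistsError if it is already there,
    # so a new run can never silently extend an older run's history.
    with open(path, "x", encoding="utf-8") as f:
        json.dump(list(scores), f)
    return path
```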

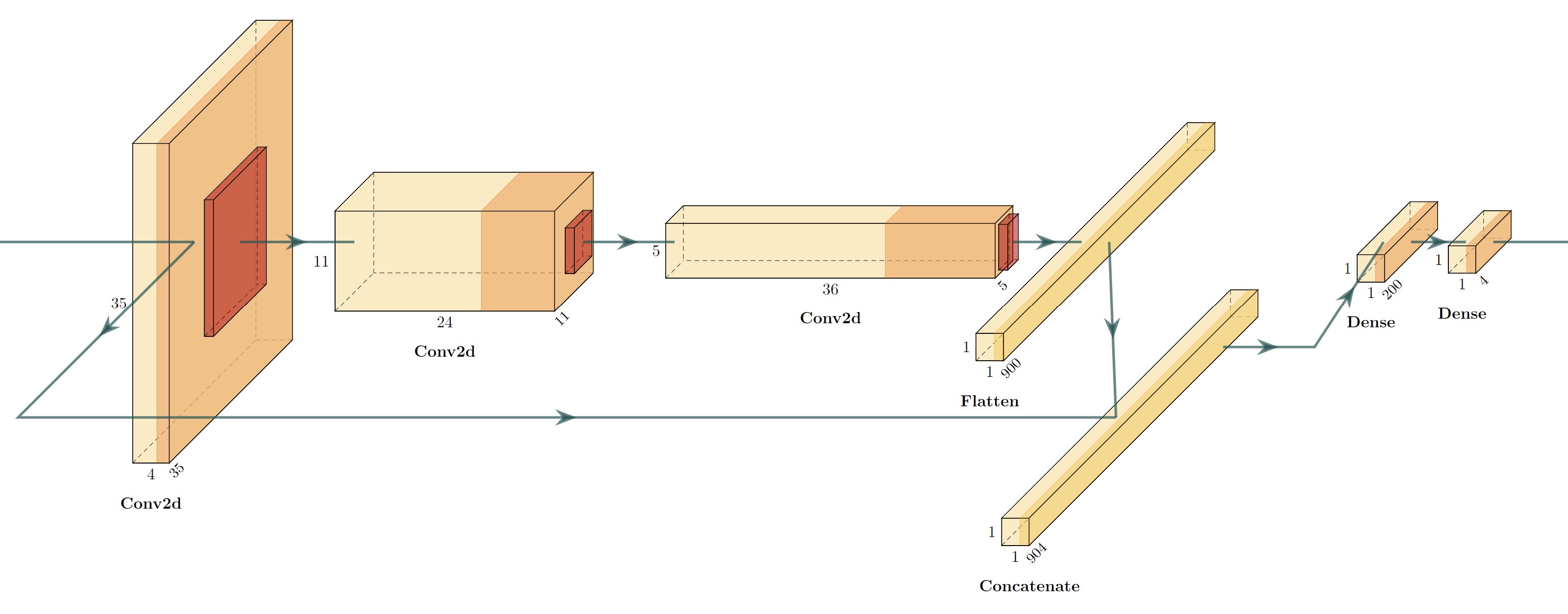

For future experiments, we could try changing the Critic architecture: I considered concatenating the information about the action with the input of the last layer instead of the one before it.
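To make the two variants concrete, here is a dependency-free sketch of a critic Q(s, a) in which the depth at which the action is concatenated is a parameter. Everything here is hypothetical: the class name, layer sizes and initialization are illustrative assumptions, a real implementation would use a deep-learning framework and trained weights, and which `action_layer` value matches our existing implementation is also an assumption.

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Small uniform random weights and zero biases (illustrative init only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: Sequence[float], layer: Layer, relu: bool) -> List[float]:
    """Fully connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


class Critic:
    """MLP estimate of Q(s, a) that concatenates the action onto the input of
    layer `action_layer` (0 = first hidden layer, len(hidden) = output layer)."""

    def __init__(self, state_dim: int, action_dim: int,
                 hidden: Sequence[int] = (64, 32), action_layer: int = 1, seed: int = 0):
        if not 0 <= action_layer <= len(hidden):
            raise ValueError("action_layer must be between 0 and len(hidden)")
        rng = random.Random(seed)
        sizes = [state_dim, *hidden, 1]
        self.action_layer = action_layer
        self.layers: List[Layer] = []
        for i in range(len(sizes) - 1):
            # The layer that receives the action needs action_dim extra inputs.
            n_in = sizes[i] + (action_dim if i == action_layer else 0)
            self.layers.append(init_layer(n_in, sizes[i + 1], rng))

    def __call__(self, state: Sequence[float], action: Sequence[float]) -> float:
        x = list(state)
        last = len(self.layers) - 1
        for i, layer in enumerate(self.layers):
            if i == self.action_layer:
                x = x + list(action)  # inject the Actor's action at this depth
            x = dense(x, layer, relu=(i != last))  # linear output for Q
        return x[0]
```

With `hidden=(64, 32)`, `action_layer=1` joins the action to the 64 outputs of the first hidden layer, while `action_layer=2` feeds it directly into the output layer alongside only 32 features, so the action reaches the Q-value through fewer transformations and competes with fewer values.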

On the one hand, more layers (2 in the existing implementation) allow the agent to recognize more sophisticated patterns; on the other hand, the information about the move produced by the Actor model might be highly significant, and it may get lost when concatenated with an additional 900 values. In the future I would like to explore the trade-off of simplifying the Critic's reasoning capacity in order to improve the algorithm's convergence, or at least to keep it from getting stuck in a local minimum. In our experiments, one such minimum was the agent constantly moving along a diagonal, where it has a low chance of hitting a wall and thus getting punished.